Is Your Data Warehouse a Mess? Here’s a Practical Path to Clean It Up

One of the most common issues in many organizations and data teams is a messy and disorganized Data Warehouse. This problem usually develops over time due to rapid team growth, evolving analytics needs, onboarding new members without proper documentation, and an increase in temporary projects.

The result? A chaotic data warehouse with inconsistent structures, duplicate or unused tables, outdated ETL scripts, and dependencies no one really understands anymore. In such situations:

- Queries become slow and expensive

- Analysts struggle to find reliable data

- There’s no coherent data model

- Maintenance and development become costly and time-consuming

The goal of this post is to provide a practical, technical roadmap for rebuilding Data Warehouses suffering from serious infrastructure issues — such as outdated and scattered ETL layers, inconsistent table design, conflicting data across sources, and lack of data quality and testing mechanisms. Cleaning up isn’t just about deleting unused tables or organizing files; it requires a rethinking of the data model, decoupling transform layers, live documentation, and automated data quality tests.

We’ll walk through a step-by-step cleanup process based on hands-on experience — from auditing data flows, removing ineffective assets, to redesigning models and building scalable pipelines.

How to Know If Your Warehouse Is a Mess

When someone says a Data Warehouse is “messy,” they usually mean the table structures don’t make sense, there’s no documentation, ETL code is scattered and outdated, and no one knows what data comes from where or goes where. In these environments:

- Queries are slow and resource-hungry

- Analysts constantly get lost trying to find the right data

- Redundant, incomplete, or meaningless data is everywhere

- The team has no clear view of the data flow

If this sounds familiar, you’re not alone. Many systems that have grown fast end up in the same situation. Let’s look at how to handle it.

Step 1: Understand the Current State

Before making any changes, you need a clear picture of the current situation. And that’s more than just documentation — it’s a full data warehouse audit:

- What tables exist and how often are they used?

- Where do ETL pipelines start and how do they flow?

- What are the dependencies between tables?

- What scripts and tools are involved?

Simple tools work well at this stage. I usually collect key info in a Google Sheet and talk to people who know (based on history or their current role). Tools like dbt docs can also help visualize and explore the current state.

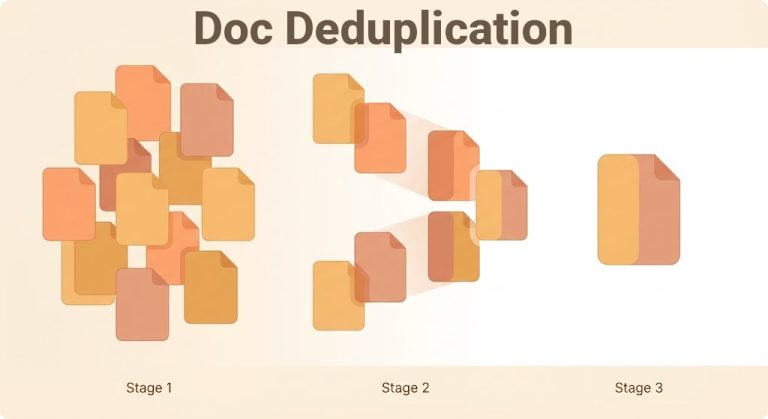

Step 2: Identify Digital Trash

Now it’s time to list dead tables, unused ETL scripts, and redundant fields. These are often remnants from years ago that no one touches anymore.

- Query logs can help find tables that haven’t been used in months

- Cronjobs or Airflow tasks whose outputs are ignored are good candidates for removal or refactoring

- Some fields are always filled but meaningless — or you might have duplicate fields with different names

Think of them as digital junk in your garage — they take up space and cause confusion.

Step 3: Redesign the Data Model

One of the most important parts of cleanup is modernizing the data model. This is where data engineering shifts from execution to design.

A domain-driven model is much more effective than flat, ad-hoc structures. For example, instead of ten different sales tables for ten countries, a single, well-structured sales model with contextual metadata is easier to maintain and scale.

If you’re using dbt (which I highly recommend), you can implement layered modeling (staging, intermediate, mart) and generate structured, automated documentation.

Step 4: Test and Assure Data Quality

One major issue with legacy data warehouses is the lack of data quality checks. Incomplete, inconsistent, or outdated data can derail entire analyses. You don’t need fancy tools — simple SQL-based tests can catch many problems.

For instance, if you have an orders table, you’d expect every order to be tied to a customer. A basic test might look like this:

SELECT COUNT(*) AS invalid_orders

FROM orders

WHERE customer_id IS NULL;

These lightweight tests can form daily or weekly checklists. By running them regularly, you’ll catch most issues before they reach reports or decisions. If you’re using Airflow or cronjobs, you can even automate them and log the results.

Later on, you can store these results and build dashboards in Metabase or Grafana to monitor data quality over time.

Step 5: Maintain Live and Real Documentation

Another common pain point is outdated or missing documentation. This creates dangerous dependencies on specific people.

Documentation shouldn’t be an afterthought. It should be part of the process from day one. Tools like DataHub, Metaplane, or dbt docs make this easier. But they don’t replace a well-written, human-friendly schema explanation. Even a solid Notion or Confluence workspace can work wonders.

Final Thoughts: It’s a Project, Not a One-Day Fix

Cleaning up a messy data warehouse doesn’t happen overnight. It’s a project — sometimes one that spans months — and it requires buy-in from everyone involved. But the good news is: when it’s done…

- The analytics team works faster and with more confidence

- Resource usage drops

- System development becomes easier, and new members onboard faster

If you’re dealing with a chaotic data warehouse and want to breathe easier, I’d be happy to help. Whether you’re looking for advice or want to start a cleanup project, feel free to reach out using Linkedin.

Good luck — and may your data always be clean and trustworthy!