Essential Skills Every Data Engineer Needs

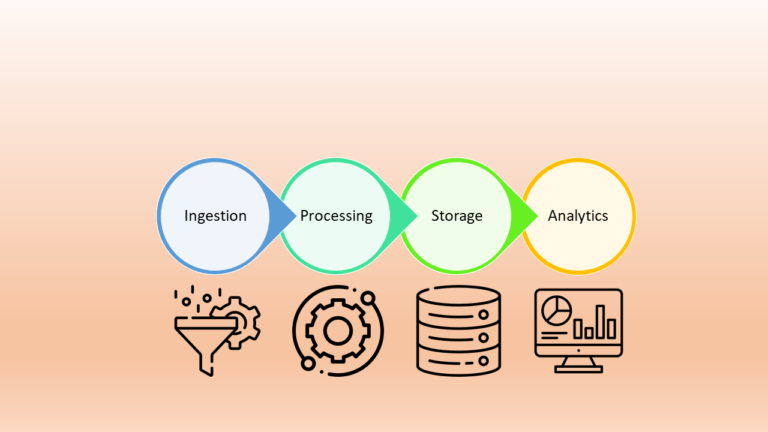

If you’re stepping into the world of data engineering—or already deep in it—you know it’s more than just moving data from A to B. It’s about designing efficient systems, optimizing performance, and ensuring data is accessible to those who need it. With data becoming the backbone of decision-making in businesses, data engineers are the architects behind the scenes, making it all possible.

But what separates a good data engineer from a great one? In this post, I’ll break down the five most essential skills every data engineer should master—going beyond just a list of tools to focus on the fundamental knowledge that truly matters.

1. SQL – The Backbone of Data Engineering

No matter how many new technologies emerge, SQL (Structured Query Language) remains the foundation of data engineering. It’s the universal language for working with databases, and mastering it is non-negotiable.

Whether you work with open-source relational databases like PostgreSQL and MySQL, enterprise systems like Oracle and SQL Server, or cloud-based data warehouses like Google BigQuery, Snowflake, and Amazon Redshift, writing efficient SQL queries is a must.

What You Should Know:

- Writing complex queries with joins, aggregations, and subqueries.

- Designing normalized and denormalized schemas.

- Using window functions for advanced analytics.

- Optimizing query performance with indexes and by reading execution plans.

- Building ETL pipelines with SQL-based tools.
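To make the first few items concrete, here is a minimal sketch that runs against an in-memory SQLite database through Python’s built-in `sqlite3` module. The `customers` and `orders` tables and their data are hypothetical, and SQLite only stands in for whatever engine you actually use. The SQL itself (a join, a `GROUP BY` aggregation, a common table expression acting as a named subquery, and a `RANK()` window function) is standard and should carry over to engines like PostgreSQL or BigQuery with little or no change. Window functions need SQLite 3.25 or newer.

```python
import sqlite3

# In-memory database with two hypothetical tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customers VALUES (1, 'EU'), (2, 'EU'), (3, 'US');
INSERT INTO orders VALUES
    (1, 1, 120.0), (2, 1, 30.0), (3, 2, 200.0), (4, 3, 50.0), (5, 3, 300.0);
""")

# The CTE joins orders to customers and aggregates spend per customer;
# the outer query then ranks customers by spend within each region.
query = """
WITH customer_totals AS (
    SELECT c.id AS customer_id, c.region, SUM(o.amount) AS total_spend
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id, c.region
)
SELECT customer_id, region, total_spend,
       RANK() OVER (PARTITION BY region ORDER BY total_spend DESC) AS region_rank
FROM customer_totals
ORDER BY region, region_rank
"""

rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
# (2, 'EU', 200.0, 1)
# (1, 'EU', 150.0, 2)
# (3, 'US', 350.0, 1)
```

Computing the totals in the CTE first keeps the window function simple: it ranks already-aggregated rows instead of mixing aggregation and ranking in a single step.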

To level up, practice writing queries on large datasets. The effects of many optimization techniques, such as indexing and query-plan analysis, only become noticeable at scale.
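The sketch below shows on a small scale why indexing and execution plans go together. It loads 50,000 hypothetical event rows into SQLite and asks the engine for its query plan before and after creating an index, using SQLite’s `EXPLAIN QUERY PLAN`. Other engines expose the same idea under different commands (for example, `EXPLAIN ANALYZE` in PostgreSQL), and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, payload TEXT)")
# 50,000 hypothetical events spread across 1,000 users (50 events per user).
conn.executemany(
    "INSERT INTO events (user_id, payload) VALUES (?, ?)",
    ((i % 1000, f"event-{i}") for i in range(50_000)),
)

query = "SELECT payload FROM events WHERE user_id = ?"

def plan(sql, params):
    """Return the human-readable 'detail' column of EXPLAIN QUERY PLAN."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

before = plan(query, (42,))
conn.execute("CREATE INDEX idx_events_user_id ON events (user_id)")
after = plan(query, (42,))
matches = conn.execute(query, (42,)).fetchall()

print(before)        # a full table scan, e.g. ['SCAN events']
print(after)         # an index lookup, e.g. ['SEARCH events USING INDEX idx_events_user_id (user_id=?)']
print(len(matches))  # 50: the index changes how rows are found, not which rows match
```

On a table this small both versions return almost instantly; the gap between a full scan and an index lookup shows up in timings only as the table grows, which is why practicing on large datasets pays off.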

2. Programming – Python or Scala? Why Not Both?

SQL is great for querying data, but as a data engineer, you’ll need programming skills to process, transform, and automate workflows.

Why Python?

Python is the go-to language for data engineering thanks to its simplicity, vast ecosystem, and strong community support. Libraries like Pandas, NumPy, and SQLAlchemy make it well suited to data manipulation and ETL tasks. It also integrates naturally with orchestration tools like Apache Airflow, whose pipelines are themselves written in Python. I’ve covered some other useful Python libraries in a separate post here.

Why Scala?

Apache Spark, one of the most widely used big data frameworks, is written in Scala, and Scala is its native API. If you’re working on large-scale distributed processing, knowing Scala can be a game-changer.

What You Should Know:

- Writing clean, reusable code for ETL jobs.

- Automating workflows with Python scripts.

- Distributed data processing with PySpark or Scala Spark.

- Error handling and logging for reliable pipelines.
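As an illustration of the last item, here is a minimal sketch of a transform step that logs and quarantines bad rows instead of failing on the first one, while still aborting if too many rows are rejected. It uses only the standard library; the logger name, field names, and the 50% rejection threshold are hypothetical choices for the example.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(name)s: %(message)s")
log = logging.getLogger("orders_etl")  # hypothetical pipeline name


def transform_orders(raw_rows, max_reject_ratio=0.5):
    """Parse raw rows, quarantining bad ones; fail if too many are rejected."""
    clean, rejected = [], []
    for line_no, row in enumerate(raw_rows, start=1):
        try:
            clean.append({"order_id": int(row["order_id"]), "amount": float(row["amount"])})
        except (KeyError, ValueError) as exc:
            # Log enough context to debug later, then keep going.
            log.warning("Rejected row %d %r: %r", line_no, row, exc)
            rejected.append(row)

    total = len(clean) + len(rejected)
    log.info("Transformed %d of %d rows (%d rejected)", len(clean), total, len(rejected))
    # A few bad rows are normal; a flood usually signals an upstream problem.
    if total and len(rejected) / total > max_reject_ratio:
        raise RuntimeError(f"{len(rejected)}/{total} rows rejected; aborting load")
    return clean, rejected


raw = [
    {"order_id": "1", "amount": "19.99"},
    {"order_id": "2", "amount": "n/a"},  # unparseable amount -> ValueError
    {"order_id": "3"},                   # missing field -> KeyError
    {"order_id": "4", "amount": "5"},
]
clean, rejected = transform_orders(raw)
print(clean)          # [{'order_id': 1, 'amount': 19.99}, {'order_id': 4, 'amount': 5.0}]
print(len(rejected))  # 2
```

Quarantining rather than crashing keeps one malformed record from blocking a whole load, while the threshold makes sure a systemic problem still stops the pipeline loudly.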

Start with Python for small-to-medium pipelines, then explore Scala if you’re diving into big data frameworks. Even for large datasets, Python remains a strong choice (Polars for fast single-machine processing, PySpark on a cluster), but knowing some Scala can open more opportunities.

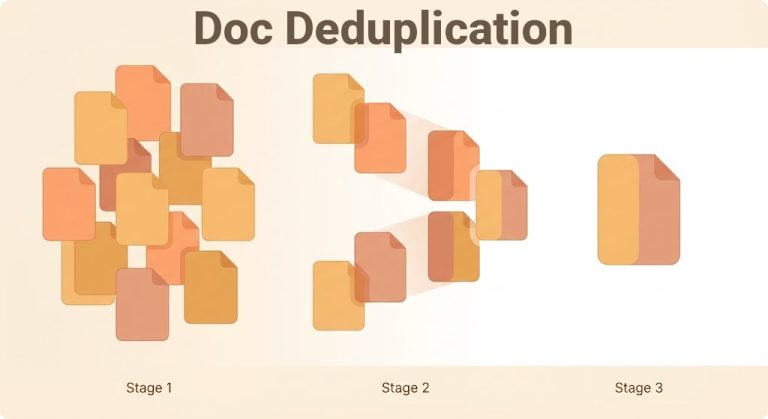

3. Data Modeling – The Art of Structuring Data

Raw data isn’t useful until it’s structured properly. As a data engineer, designing efficient data models is crucial for performance, cost optimization, and usability.

What You Should Know:

- Designing star and snowflake schemas for analytical workloads.

- Understanding the difference between OLTP and OLAP systems.

- Building scalable data lakes and data warehouses.

- Implementing partitioning strategies for large datasets.

- Balancing normalized vs. denormalized data models.
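A minimal star schema might look like the sketch below, again using SQLite from Python’s standard library so it runs anywhere. The `fact_sales`, `dim_product`, and `dim_date` tables are hypothetical: the fact table holds numeric measures plus foreign keys, and each dimension table holds the descriptive attributes that analysts filter and group by.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables: descriptive attributes, one row per entity.
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, full_date TEXT, month TEXT);

-- Fact table: one row per sale, holding foreign keys plus numeric measures.
CREATE TABLE fact_sales (
    date_key INTEGER REFERENCES dim_date (date_key),
    product_key INTEGER REFERENCES dim_product (product_key),
    quantity INTEGER,
    revenue REAL
);

INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
INSERT INTO dim_date VALUES (20240105, '2024-01-05', '2024-01'), (20240210, '2024-02-10', '2024-02');
INSERT INTO fact_sales VALUES
    (20240105, 1, 2, 2000.0),
    (20240105, 2, 1, 300.0),
    (20240210, 1, 1, 1000.0);
""")

# Typical star-schema query: join the fact table to its dimensions, then aggregate.
rows = conn.execute("""
SELECT d.month, p.category, SUM(f.revenue) AS revenue
FROM fact_sales AS f
JOIN dim_date AS d ON d.date_key = f.date_key
JOIN dim_product AS p ON p.product_key = f.product_key
GROUP BY d.month, p.category
ORDER BY d.month, p.category
""").fetchall()

for row in rows:
    print(row)
# ('2024-01', 'Electronics', 2000.0)
# ('2024-01', 'Furniture', 300.0)
# ('2024-02', 'Electronics', 1000.0)
```

In a snowflake schema, `dim_product` would be normalized further, for example by moving `category` into its own table referenced by a key. That removes some redundancy at the cost of an extra join in every query like the one above.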

The right data model improves query performance, reduces storage costs, and makes data more accessible to analysts and data scientists.
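Partitioning, from the list above, is one of the clearest examples of a modeling choice that pays off at query time. The sketch below writes hypothetical event records into Hive-style folders (`event_date=YYYY-MM-DD/`), the directory layout that engines such as Spark and Hive can use for partition pruning, and then reads back a date range by skipping entire folders without opening them. It is plain Python and only illustrates the idea; real engines do this pruning for you based on the query’s filter.

```python
import csv
import tempfile
from collections import defaultdict
from pathlib import Path


def write_partitioned(records, root):
    """Write records into Hive-style folders: root/event_date=YYYY-MM-DD/part-0.csv."""
    by_date = defaultdict(list)
    for rec in records:
        by_date[rec["event_date"]].append(rec)
    for event_date, rows in by_date.items():
        part_dir = Path(root) / f"event_date={event_date}"
        part_dir.mkdir(parents=True, exist_ok=True)
        with open(part_dir / "part-0.csv", "w", newline="") as f:
            writer = csv.DictWriter(f, fieldnames=["user_id", "event_date"])
            writer.writeheader()
            writer.writerows(rows)


def read_date_range(root, start, end):
    """Read only partitions with start <= event_date <= end (partition pruning)."""
    rows, files_read = [], 0
    for part_dir in sorted(Path(root).glob("event_date=*")):
        event_date = part_dir.name.split("=", 1)[1]
        if not start <= event_date <= end:
            continue  # pruned: no file in this folder is ever opened
        for path in part_dir.glob("*.csv"):
            files_read += 1
            with open(path, newline="") as f:
                rows.extend(csv.DictReader(f))
    return rows, files_read


# Ten days of hypothetical events, three per day.
records = [{"user_id": u, "event_date": f"2024-01-{d:02d}"} for d in range(1, 11) for u in range(3)]
with tempfile.TemporaryDirectory() as root:
    write_partitioned(records, root)
    rows, files_read = read_date_range(root, "2024-01-03", "2024-01-04")
print(files_read, len(rows))  # 2 6
```

ISO-formatted dates sort correctly as strings, which is what lets the range check work on folder names alone.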

4. Distributed Data Processing – Handling Big Data at Scale

When working with massive datasets, a single machine won’t cut it. That’s where distributed computing frameworks like Apache Spark come in.

What You Should Know:

- Writing Spark jobs in Python (PySpark) or Scala.

- Partitioning data for efficient parallel processing.

- Optimizing performance by working with Spark’s lazy evaluation and caching reused intermediate results.

- Working with distributed storage such as HDFS and cloud object storage, and with streaming platforms like Kafka.

- Troubleshooting performance bottlenecks in distributed pipelines.
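To see what partitioning and shuffling mean in practice, here is a single-process simulation of a distributed word count. Chunks of input stand in for data read by different workers, each chunk is pre-aggregated locally, and a hash partitioner then routes every word to exactly one partition so final counts can be computed independently per partition. This is roughly the partial-aggregate, shuffle, final-aggregate pattern that engines like Spark use for grouped aggregations, but the code is illustrative and does not use Spark. It uses `zlib.crc32` instead of Python’s built-in `hash()` because string hashing is randomized between Python processes, while a partitioner must give every worker the same answer.

```python
import zlib
from collections import Counter


def partition_for(key, num_partitions):
    """Deterministic hash partitioner: a key always maps to the same partition."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def distributed_word_count(lines, num_partitions=4):
    # Split the input as if each chunk were read by a different worker.
    chunks = [lines[i::num_partitions] for i in range(num_partitions)]

    # Map side: each worker pre-aggregates its own chunk (partial counts).
    partials = [Counter(word for line in chunk for word in line.split()) for chunk in chunks]

    # Shuffle: send every (word, count) pair to the partition that owns that word.
    partitions = [Counter() for _ in range(num_partitions)]
    for partial in partials:
        for word, count in partial.items():
            partitions[partition_for(word, num_partitions)][word] += count

    # Reduce side: each partition holds the complete count for its own words,
    # so partitions can be finalized independently and simply combined.
    totals = {}
    for part in partitions:
        totals.update(part)
    return totals, partitions


totals, partitions = distributed_word_count(["spark spark kafka", "airflow spark", "kafka sql"])
print(totals)  # spark: 3, kafka: 2, airflow: 1, sql: 1 (key order depends on partitioning)
```

Pre-aggregating before the shuffle means each worker sends one `(word, count)` pair per distinct word rather than one record per occurrence, which can greatly reduce the data moved over the network.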

Understanding distributed systems is what sets modern data engineers apart.
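Lazy evaluation and caching, from the list above, are easier to reason about with a toy model. The `LazyDataset` class below is hypothetical and far simpler than a Spark DataFrame, but it follows the same contract: transformations such as `map` and `filter` only record steps, actions such as `collect` and `count` run the whole recorded plan, and `cache()` marks a dataset so its result is kept after the first action instead of being recomputed from the source.

```python
class LazyDataset:
    """Toy model of a lazily evaluated dataset (hypothetical, not Spark's API)."""

    def __init__(self, source, steps=()):
        self._source = source        # zero-argument callable returning an iterable
        self._steps = tuple(steps)   # recorded ("map" | "filter", function) pairs
        self._cache_requested = False
        self._cached = None

    # Transformations: record a step and return a new dataset; nothing runs yet.
    def map(self, fn):
        return LazyDataset(self._source, self._steps + (("map", fn),))

    def filter(self, predicate):
        return LazyDataset(self._source, self._steps + (("filter", predicate),))

    def cache(self):
        # As in Spark, this only marks the dataset; data is kept on the first action.
        self._cache_requested = True
        return self

    def _compute(self):
        if self._cached is not None:
            return self._cached
        data = self._source()
        for kind, fn in self._steps:
            data = map(fn, data) if kind == "map" else filter(fn, data)
        result = list(data)
        if self._cache_requested:
            self._cached = result
        return result

    # Actions: force execution of the whole recorded plan.
    def collect(self):
        return list(self._compute())

    def count(self):
        return len(self._compute())


source_calls = {"n": 0}

def read_source():
    """Stand-in for an expensive read, such as scanning files in cloud storage."""
    source_calls["n"] += 1
    return range(1, 11)

evens_squared = LazyDataset(read_source).filter(lambda x: x % 2 == 0).map(lambda x: x * x)
print(source_calls["n"])        # 0: defining transformations reads nothing
evens_squared.cache()
print(evens_squared.collect())  # [4, 16, 36, 64, 100]
print(evens_squared.count())    # 5, served from the cache
print(source_calls["n"])        # 1: the source was read only once
```

Without `cache()`, each action here would read the source again, which mirrors what can happen in Spark when an expensive DataFrame is reused by several actions without being cached.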

5. Data Orchestration – Automating and Monitoring Pipelines

Once your data pipelines are built, they need to run reliably without manual intervention. That’s where orchestration tools like Apache Airflow come in.

Airflow allows you to schedule, monitor, and retry workflows automatically. Its DAG-based approach makes it easy to visualize dependencies and debug issues, especially when revisiting a pipeline after some time.
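Under the hood, scheduling a DAG comes down to a topological sort: a task can start only once all of its upstream tasks have finished. The sketch below models that with Python’s standard `graphlib` module (Python 3.9+), not with Airflow itself; in Airflow you would declare the same dependencies with operators such as `extract >> transform`. The task names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of upstream tasks it waits for.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform"},
    "refresh_dashboard": {"load_warehouse"},
}


def execution_batches(dag):
    """Group tasks into batches that could run in parallel, in dependency order."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises CycleError if the graph contains a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose upstream tasks are all done
        batches.append(ready)
        sorter.done(*ready)                 # mark them finished to unlock downstream tasks
    return batches


for batch in execution_batches(pipeline):
    print(batch)
# ['extract_customers', 'extract_orders']
# ['transform']
# ['load_warehouse']
# ['refresh_dashboard']

try:
    execution_batches({"a": {"b"}, "b": {"a"}})
except CycleError as err:
    print("Not a DAG, cycle:", err.args[1])
```

Tasks in the same batch have no dependencies on one another, so a scheduler is free to run them in parallel; the cycle check is why the graph has to be acyclic in the first place.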

What You Should Know:

- Setting up DAGs (Directed Acyclic Graphs) in Airflow.

- Implementing retry policies and alerting.

- Managing task dependencies to avoid conflicts.

- Logging and monitoring pipeline health.

- Deploying and scaling pipelines in cloud environments.
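Airflow configures retries declaratively through task arguments such as `retries`, `retry_delay`, `retry_exponential_backoff`, and `on_failure_callback`. The plain-Python sketch below is not Airflow’s implementation, and Airflow’s own backoff calculation may differ in detail, but it shows what those settings amount to: a bounded number of attempts, delays that double after each failure up to a cap, and an alert hook that fires only when the final attempt fails. The `sleep` parameter is injectable so the behavior can be checked without actually waiting.

```python
import time


def run_with_retries(task, retries=3, retry_delay=1.0, exponential_backoff=True,
                     max_delay=60.0, on_failure=None, sleep=time.sleep):
    """Run `task`, retrying up to `retries` times; call `on_failure` if all attempts fail."""
    attempt = 0
    while True:
        try:
            return task()
        except Exception as exc:
            if attempt >= retries:
                if on_failure is not None:
                    on_failure(exc)  # alerting hook: page someone, post to chat, send email
                raise
            # Wait retry_delay, then 2x, 4x, ... (capped at max_delay) before retrying.
            delay = retry_delay * 2 ** attempt if exponential_backoff else retry_delay
            sleep(min(delay, max_delay))
            attempt += 1


# Hypothetical task that fails twice, e.g. while the warehouse is briefly unreachable.
load_calls = {"n": 0}

def flaky_load():
    load_calls["n"] += 1
    if load_calls["n"] < 3:
        raise TimeoutError("warehouse not reachable")
    return "ok"

delays, alerts = [], []
result = run_with_retries(flaky_load, retries=3, retry_delay=1.0,
                          on_failure=alerts.append, sleep=delays.append)
print(result, delays, alerts)  # ok [1.0, 2.0] []
```

Backoff gives a struggling upstream system room to recover instead of hammering it, and alerting only on the final failure keeps transient errors from paging anyone.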

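Airflow’s XComs feature lets tasks pass small pieces of data, such as file paths or row counts, to one another, adding flexibility to your workflows. One common beginner mistake is ignoring dependencies between DAG runs (for example, letting overlapping runs of the same DAG write to the same table), which can lead to execution conflicts. Settings such as the DAG-level `max_active_runs` and the task-level `depends_on_past` help prevent this, so be mindful of them when designing pipelines.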
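A toy model helps explain both points. The hypothetical `ToyXComStore` below is not Airflow’s API (in Airflow you would use `xcom_push` and `xcom_pull`, or simply return a value from a TaskFlow task); it only mimics the idea that each value is scoped to a DAG run, a task, and a key, with `return_value` as the default key. The upstream task pushes a small reference, a file path, rather than the data itself, and two runs of the same pipeline never see each other’s values.

```python
class ToyXComStore:
    """Hypothetical in-memory stand-in for XCom storage (not Airflow's API)."""

    def __init__(self):
        self._values = {}

    def push(self, run_id, task_id, key, value):
        # Every value is scoped to a DAG run, a task, and a key.
        self._values[(run_id, task_id, key)] = value

    def pull(self, run_id, task_id, key="return_value"):
        return self._values.get((run_id, task_id, key))


store = ToyXComStore()

def extract(run_id):
    # Push a small reference to the data (a hypothetical file path), not the data itself.
    path = f"/data/orders/{run_id}.parquet"
    store.push(run_id, "extract", "return_value", path)

def load(run_id):
    # The downstream task pulls the value pushed by "extract" in the same run.
    path = store.pull(run_id, "extract")
    return f"loading {path}"

# Two runs of the same pipeline, e.g. a scheduled run and a backfill.
for run_id in ("2024-01-01", "2024-01-02"):
    extract(run_id)

print(load("2024-01-01"))  # loading /data/orders/2024-01-01.parquet
print(load("2024-01-02"))  # loading /data/orders/2024-01-02.parquet
```

Keeping XCom values small matters because Airflow stores them in its metadata database by default; large datasets belong in external storage, with only their location passed between tasks.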

As your data engineering career progresses, you will also need to handle the challenges of launching a pipeline that must begin with a large volume of existing data. I refer to this state as “cold starting” a data pipeline, and you can read more about it in a related article here.

Final Thoughts

Data engineering is one of the fastest-growing and most exciting fields, but success isn’t just about knowing the latest tools. It’s about understanding how data flows through a system, optimizing performance, and designing scalable architectures.

If you’re starting out or looking to level up, focus on these five core skills and continuously refine them. Strong foundations in SQL, programming, data modeling, distributed systems, and orchestration will make you a reliable and highly sought-after data engineer.

What do you think? Are there other must-have skills for data engineers? Drop a comment—I’d love to hear your thoughts!