From Crisis to Stability: Backing Up and Protecting Data in Times of Peace

A few weeks ago, amid the chaos of war and crisis, I wrote about the importance of backups: from pg_dump and mysqldump to mongoexport and rsync, and how they help prevent data loss in critical situations. Now that things have calmed down a bit, it’s time to revisit the topic. The previous post was about pulling the emergency parachute cord. This one is about keeping our data even safer, something like fitting it with extra safety locks.

1. Scheduled and Automated Backups

If you relied on quick, ad-hoc backup scripts during wartime, now is the time to automate the process.

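In my opinion, a cron job plus a bash script is a simple yet effective approach. For example, open your crontab with crontab -e and add an entry that dumps the database every night at 02:00:

```shell
# min hour day month weekday  command   (inside crontab, % must be escaped as \%)
0 2 * * * pg_dump -U your_username -d mydb > /backups/pg/mydb_$(date +\%Y\%m\%d_\%H\%M\%S).sql 2>> /var/log/pg_backup.log
```

Because cron cannot answer a password prompt, store the credentials in ~/.pgpass for the account that runs the job.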
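Redirecting pg_dump straight into the final file has one weakness: if a run fails halfway (wrong credentials, a dropped connection, a full disk), a truncated .sql file still appears under a proper name and looks like a valid backup. Below is a minimal sketch of a wrapper that writes to a temporary .partial file and renames it only when the command exits successfully. The function name atomic_dump and the .partial suffix are my own assumptions, not part of any tool:

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a dump command, send its stdout to a temporary
# ".partial" file, and rename it to the final name only if the command succeeded.
atomic_dump() {
  local target=$1
  shift
  local tmp="$target.partial"
  if "$@" > "$tmp"; then
    mv -- "$tmp" "$target"   # a rename within one filesystem is atomic
  else
    local status=$?          # exit status of the failed command
    rm -f -- "$tmp"          # never leave a half-written file that looks valid
    echo "$(date '+%F %T') backup failed (exit $status): $target" >&2
    return "$status"
  fi
}
```

In a script that cron runs (say, a hypothetical /usr/local/bin/pg_backup.sh), the nightly job would become atomic_dump "/backups/pg/mydb_$(date +%Y%m%d_%H%M%S).sql" pg_dump -U your_username -d mydb 2>> /var/log/pg_backup.log. Inside a script file the % signs need no escaping. Unfinished files end in .partial, so a cleanup rule matching *.sql never treats them as backups.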

Of course, there are dedicated tools as well, such as Barman for PostgreSQL and Percona XtraBackup for MySQL, which I haven’t tried myself yet. I’d be glad to hear about your experience with any of them.

While scheduling backups, don’t forget to set up a standard folder structure and a traceable naming convention. A good backup file speaks for itself: its path and name tell you which database it belongs to, which server it came from, and when it was taken. Proper naming also makes disk-space management easier, which I’ll come back to later.
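One possible convention, sketched as a small bash function. The layout (root, engine, host, database, then a timestamped file name) and the name backup_path are illustrative assumptions, so adapt them to your own structure. The zero-padded timestamp makes alphabetical order match chronological order, which retention scripts can rely on:

```shell
#!/usr/bin/env bash
# Hypothetical naming convention:
#   <root>/<engine>/<host>/<database>/<database>_<host>_<YYYYmmdd_HHMMSS>.<ext>
# Zero-padded timestamps sort alphabetically in chronological order.
backup_path() {
  local root=$1 engine=$2 host=$3 db=$4 ext=$5
  local ts=${6:-$(date +%Y%m%d_%H%M%S)}  # optional fixed timestamp, e.g. for reruns
  printf '%s/%s/%s/%s/%s_%s_%s.%s\n' \
    "$root" "$engine" "$host" "$db" "$db" "$host" "$ts" "$ext"
}
```

For example, target=$(backup_path /backups pg "$(hostname -s)" mydb sql) followed by mkdir -p "$(dirname "$target")" prepares a path of the form /backups/pg/<host>/mydb/mydb_<host>_<timestamp>.sql.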

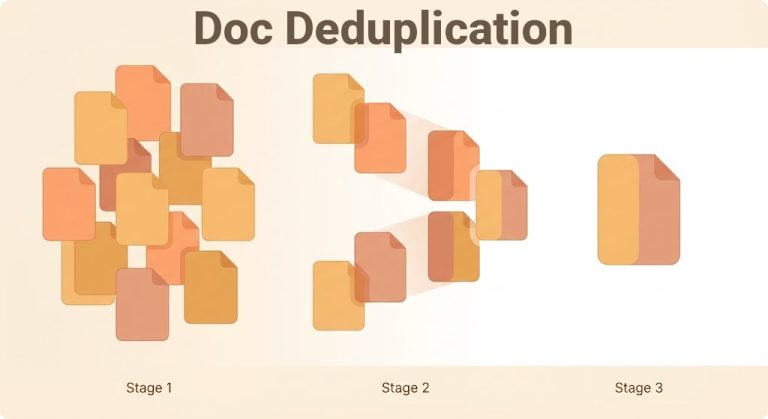

2. Versioning and Retention Strategy

Keeping only one backup file is not enough, especially against human error or ransomware: if that single copy already contains the mistake, or has been encrypted, there is nothing older to fall back on. Keep at least the last three versions and delete older ones.

For example, you can use find with -mtime to remove backups older than 7 days:

```shell
# -mtime +7 matches files whose age, counted in whole days, is more than 7
find /backups/pg/ -type f -name "*.sql" -mtime +7 -delete
```

How long you keep backup files depends on your company’s policies, regulations, storage limits, and how important the data is.
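One caveat with the find command: it deletes purely by age. If the backup job silently fails for more than a week while the cleanup keeps running, it will eventually remove every copy you have. Here is a sketch that combines the two rules from this section: delete files older than a given number of days, but always keep the newest few. It assumes file names embed a sortable timestamp (as in mydb_20240101_020000.sql), and prune_backups is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical retention helper: delete backups older than max_age_days,
# but never touch the keep_min newest files, even if they are old.
# Assumes names embed a sortable timestamp (mydb_20240101_020000.sql),
# so alphabetical order is chronological order.
prune_backups() {
  local dir=$1 keep_min=$2 max_age_days=$3 pattern=${4:-*.sql}
  local -a files=()
  local f
  for f in "$dir"/$pattern; do        # glob results come back sorted by name
    if [[ -f $f ]]; then files+=("$f"); fi
  done

  # Only the oldest (count - keep_min) files are candidates for deletion.
  local candidates=$(( ${#files[@]} - keep_min ))
  local i
  for (( i = 0; i < candidates; i++ )); do
    # find prints the path only if its age in whole days exceeds max_age_days
    if [[ -n $(find "${files[i]}" -maxdepth 0 -mtime +"$max_age_days") ]]; then
      rm -f -- "${files[i]}"
      echo "deleted ${files[i]}"
    fi
  done
}
```

For example, prune_backups /backups/pg/mydb 3 7 never deletes the three newest files and removes the rest only once they are more than 7 days old. Unlike the find command, it looks only at files directly inside the given directory, so call it once per database folder.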

3. Incremental Backups: Smart and Efficient

Full backups are essential, but running them daily on large datasets can waste time and resources. That’s why you should consider incremental and differential backups. Let me briefly explain the difference:

First, remember: both methods rely on having a full backup done beforehand.

- Incremental: Each backup stores only changes since the last backup—whether it was incremental or full.

- Differential: Each backup stores changes since the last full backup only.

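Incremental backups are faster and use less storage, but a restore needs the full backup plus every incremental file taken since, applied in order, so losing one file in the chain breaks the restore. Differential backups grow larger as time passes since the last full backup, but a restore needs just two files: the full backup and the latest differential.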
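To see the difference concretely at the file level, GNU tar’s --listed-incremental option is a handy illustration. tar records the state of the files in a snapshot file and, on the next run, archives only what changed since that snapshot. The sketch below assumes GNU tar, and the archive and snapshot file names are made up. It builds an incremental chain by letting tar keep updating one snapshot file, and it builds differentials by always starting from a copy of the full backup’s snapshot. Databases usually have their own native mechanisms for this, so treat it as an illustration of the concept rather than a database backup method:

```shell
#!/usr/bin/env bash
# Sketch of incremental vs differential archives with GNU tar snapshot files
# (--listed-incremental). File-level only; file names are hypothetical.

# Full (level 0): a fresh snapshot file makes tar archive everything.
full_backup() {
  local src=$1 dest=$2
  rm -f -- "$dest/level0.snar"
  tar --create --file="$dest/full.tar" \
      --listed-incremental="$dest/level0.snar" -C "$src" . || return
  # The incremental chain starts from the state recorded by the full backup.
  cp -- "$dest/level0.snar" "$dest/chain.snar"
}

# Incremental: changes since the previous backup of any kind.
# tar rewrites chain.snar after each run, so the next run builds on this one.
incremental_backup() {
  local src=$1 dest=$2 name=$3
  tar --create --file="$dest/$name.tar" \
      --listed-incremental="$dest/chain.snar" -C "$src" .
}

# Differential: changes since the last full backup.
# tar works on a throwaway copy of level0.snar, so the reference never moves.
differential_backup() {
  local src=$1 dest=$2 name=$3
  cp -- "$dest/level0.snar" "$dest/diff.snar"
  tar --create --file="$dest/$name.tar" \
      --listed-incremental="$dest/diff.snar" -C "$src" .
}
```

To restore, extract full.tar first. Then extract either every incremental archive in the order it was taken, or only the latest differential. Use tar --extract --listed-incremental=/dev/null --file=ARCHIVE -C RESTORE_DIR for each archive. This is the many-files versus two-files trade-off described above.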

4. Restore Testing

It would be painful to discover, in the middle of a crisis, that the backups you’ve been taking don’t actually work.

Make a habit of regularly testing your backups by restoring them in a test environment. That way, you’ll know you haven’t just been wasting time and storage space. 🙂
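A restore test can be automated as well. The sketch below assumes plain-text dumps like the one from the cron job above. It also assumes a test server where createdb, psql, and dropdb can connect without a password prompt (for example through ~/.pgpass). It first checks for the footer comment that pg_dump writes at the end of a complete plain-format dump. It then restores into a throwaway database and runs a simple sanity query. The function names and the restore_check_ database prefix are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch of a restore test for plain-text pg_dump files. Assumes the PostgreSQL
# client tools (createdb, psql, dropdb) can connect without a password prompt.

# Quick pre-check: a complete plain-format dump ends with this comment line.
dump_looks_complete() {
  local footer
  footer=$(tail -n 5 -- "$1") || return 1
  [[ $footer == *"PostgreSQL database dump complete"* ]]
}

# Restore into a throwaway database, run a sanity query, then clean up.
restore_test() {
  local dump_file=$1
  local scratch_db
  scratch_db="restore_check_$(date +%Y%m%d%H%M%S)"   # hypothetical naming
  if ! dump_looks_complete "$dump_file"; then
    echo "incomplete or unreadable dump: $dump_file" >&2
    return 1
  fi
  createdb "$scratch_db" || return 1
  if psql -X -q -v ON_ERROR_STOP=1 -d "$scratch_db" -f "$dump_file" > /dev/null; then
    local tables
    tables=$(psql -X -tA -d "$scratch_db" -c \
      "SELECT count(*) FROM information_schema.tables WHERE table_schema = 'public'")
    echo "restore OK: $tables tables in schema public"
    dropdb "$scratch_db"
    return 0
  fi
  echo "restore FAILED: $dump_file" >&2
  dropdb --if-exists "$scratch_db"
  return 1
}
```

For example, restore_test /backups/pg/mydb_20240101_020000.sql could run weekly from cron on the test server. If the dump assigns ownership to roles that don’t exist there, the restore stops at the first error. In that case, either create those roles on the test server or take the dumps with pg_dump’s --no-owner option. A table count is only a smoke test. Comparing row counts of important tables against production is a stronger check.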

5. Don’t Forget Geography

During crises, the location of your backups becomes crucial. If they’re stored on the same server that’s at physical or network risk, they’re basically useless.

Now that it’s peacetime, plan for geo-distributed backups.

I suggest keeping:

- One local copy

- One in a remote data center

- And if possible, one in object storage (like S3 or similar services)
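Here is a sketch of how the three copies might be produced from one backup file. It assumes rsync over SSH to the remote data center and the AWS CLI for S3 (or an S3-compatible service). The host, bucket, and function names are placeholders. A SHA-256 checksum file travels with each copy, so any copy can be verified independently before you need it:

```shell
#!/usr/bin/env bash
# Sketch of the "three locations" idea. The destinations below are
# placeholders (hypothetical host and bucket); replace them with your own.
REMOTE_DEST="backup@dr-site.example.com:/backups/pg/"
S3_DEST="s3://example-backup-bucket/pg/"

# Write a SHA-256 sidecar next to the backup, using a relative file name
# so the check still works after the pair is copied somewhere else.
write_checksum() {
  local file=$1
  (cd -- "$(dirname -- "$file")" && sha256sum -- "$(basename -- "$file")") > "$file.sha256"
}

# Verify a copy against its sidecar, wherever the pair ended up.
verify_checksum() {
  local file=$1
  (cd -- "$(dirname -- "$file")" && sha256sum --check --quiet -- "$(basename -- "$file").sha256")
}

# Copy 1 stays where it was written; copy 2 goes to a remote data center
# over rsync/SSH; copy 3 goes to object storage via the AWS CLI.
replicate_backup() {
  local file=$1
  write_checksum "$file" &&
    rsync -a --partial "$file" "$file.sha256" "$REMOTE_DEST" &&
    aws s3 cp "$file" "$S3_DEST" &&
    aws s3 cp "$file.sha256" "$S3_DEST"
}
```

After replicate_backup /backups/pg/mydb_20240101_020000.sql finishes, you can confirm that the copies are identical to the original. Run verify_checksum on the remote server against its copy, or on a file downloaded back from the bucket.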

6. Document Your Processes

In crisis mode, recovery speed is everything. I don’t have the best memory, which is why I love documentation: even the things I forget are written down somewhere and can be looked up quickly.

Also, in such conditions your teammates are often busy with other urgent tasks. You might be the one who has to restore a service you were never involved in, and there’s no time for questions and answers. So don’t skip documentation.

Final Words

We’re ordinary people. Unlike politicians, we’re not looking for war, neither in words nor in action. But as programmers and data engineers, we’re responsible for the protection and security of our data and systems. Backups may seem like a small thing, but when the time comes, they make all the difference.

So don’t neglect them. 🙂