Key Principles of Data Modeling: A Roadmap to Success in the Big Data Era

Data is the lifeblood of organizations. Yet, throughout my career, I’ve often encountered the reality that having data alone isn’t enough. What truly matters is how we organize and effectively use that data. This is where the art and science of data modeling come into play. Data modeling isn’t just a technical process—it’s a deep, thoughtful approach to understanding and mapping data. This mindset helps us build efficient, reliable, and scalable systems.

In this post, we’ll explore the principles of data modeling and why this skill is essential for every data engineer. My goal is to provide you with a clearer understanding through deeper insights and practical examples.

What Is Data Modeling, and Why Is It So Important?

Simply put, data modeling is the process of defining and organizing a system’s data infrastructure. It involves creating a conceptual representation of how data is structured and related within a database. This “map” isn’t just for developers—it’s also understandable to business stakeholders, improving data comprehension and enhancing data quality.

The importance of data modeling becomes even more apparent when working with large, complex projects. Without proper planning for data organization, we may face issues like poor performance, frequent errors, and the need for costly code rewrites—all of which waste time and resources. Data modeling helps us design robust data systems from the outset.

Three Levels of Data Modeling: From Concept to Implementation

Data modeling typically occurs at three levels, each offering a different degree of detail. Understanding these levels helps us communicate effectively with various stakeholders (technical and non-technical). I also recommend reading this article to better grasp business needs.

1. Conceptual Data Model

This level focuses on concepts and rules. It identifies key entities and their relationships without delving into technical details like database types.

Practical Example: Suppose we’re modeling data for a smart home. At the conceptual level, the main entities are:

- Home

- Room

- Sensor

- Temperature

- Energy Consumption

Conceptual relationships might include:

- A home contains multiple rooms.

- Each room has one or more sensors.

- A sensor produces multiple temperature readings over time.

2. Logical Data Model

This model adds more detail than the conceptual one but remains independent of any specific database management system (DBMS). Here, we define attributes for each entity (e.g., room name, sensor type), specify data types, and describe relationships in greater detail (e.g., one-to-one, one-to-many).

Practical Example (Continuing the Smart Home Scenario):

In the logical model, we add attributes:

- Home: Home ID (Primary Key), Address

- Room: Room ID (Primary Key), Room Name, Home ID (Foreign Key linking to Home)

- Sensor: Sensor ID (Primary Key), Sensor Type, Room ID (Foreign Key linking to Room)

- Temperature: Temperature ID (Primary Key), Temperature Value, Unit, Timestamp, Sensor ID (Foreign Key linking to Sensor)

- Energy Consumption: Energy ID (Primary Key), Consumption Value, Unit, Timestamp, Home ID (Foreign Key linking to Home)

Relationships are also refined—for example, the “one home contains many rooms” relationship is defined as one-to-many between Home and Room.

3. Physical Data Model

This is the final level, detailing technical aspects of data storage and access within a specific DBMS. Here, entities become tables, attributes become columns, and we define data types, indexes, primary/foreign keys, and constraints.

Practical Example (Continuing with PostgreSQL as the DBMS):

- Homes Table: Columns:

home_id(INTEGER, PRIMARY KEY),address(VARCHAR) - Rooms Table: Columns:

room_id(INTEGER, PRIMARY KEY),room_name(VARCHAR),home_id(INTEGER, FOREIGN KEY referencing Homes) - Sensors Table: Columns:

sensor_id(INTEGER, PRIMARY KEY),sensor_type(VARCHAR),room_id(INTEGER, FOREIGN KEY referencing Rooms) - TemperatureReadings Table: Columns:

reading_id(INTEGER, PRIMARY KEY),temperature_value(DECIMAL),unit(VARCHAR),timestamp(TIMESTAMP),sensor_id(INTEGER, FOREIGN KEY referencing Sensors) - EnergyConsumption Table: Columns:

consumption_id(INTEGER, PRIMARY KEY),consumption_value(DECIMAL),unit(VARCHAR),timestamp(TIMESTAMP),home_id(INTEGER, FOREIGN KEY referencing Homes)

We might also partition data or create indexes on timestamp columns in TemperatureReadings and EnergyConsumption and on room_id in Sensors to optimize query performance.

Key Principles of Data Modeling

Now that we’ve covered the levels of data modeling, let’s look at some fundamental principles crucial for data engineers:

Align with Business Goals

Your data model should reflect business reality and needs. As data engineers, we can sometimes get lost in technical details and lose sight of the big picture. Close collaboration with business analysts and stakeholders ensures alignment with business objectives.

Proper Documentation

Even the best model is useless if it’s not understandable. Clear, up-to-date documentation helps teams know what data is available and how to use it, speeding up onboarding and simplifying future maintenance.

Design for Adaptability

Rarely do we get the model perfect on the first try. Data landscapes evolve, and new requirements emerge. Your model should be designed for easy updates with minimal disruption—flexibility today saves headaches tomorrow.

Choose the Right Modeling Technique

Different data models exist (relational, dimensional, Data Vault, Activity Schema). The right choice depends on your data type and workload:

- Relational modeling suits customer-facing services.

- Dimensional modeling (Fact and Dimension tables) is ideal for analytical queries (read more…).

- Schema-on-Read and columnar formats (Parquet, Avro) work best for Data Lakes requiring flexibility (read more…).

- Data Vault excels for large, evolving datasets needing auditability.

- Activity Schema is great for real-time streaming data analysis (read more…).

Success lies in understanding each technique’s strengths and selecting the best fit for your use case.

Prioritize Data Governance & Security

With growing privacy and security concerns, your modeling process must account for data protection, access management, and governance strategies. A strong governance framework ensures accuracy, consistency, and trust in your data.

Optimize for Big Data

For massive datasets, advanced techniques like columnar storage (Parquet, ORC), indexing, and partitioning (by date, ID, or region) dramatically improve query performance and storage efficiency. Incremental modeling—processing only new or changed data—also enhances efficiency.

Avoid Premature Optimization

While optimization matters, it shouldn’t hinder development. Sometimes, it’s better to make core decisions correctly first, then refine the model iteratively based on feedback. Keep it simple; optimize only when scaling becomes an issue.

Example: Modeling for Data Lakes vs. Data Warehouses

Suppose your organization has both a Data Lake (for raw, variable data) and a Data Warehouse (for structured reporting and analytics).

- In the Data Lake:

Use Schema-on-Read. Raw data (JSON, CSV, Parquet) lands in storage (e.g., AWS S3). Modeling is less rigid, focusing on file organization and metadata catalogs. Columnar formats like Parquet boost performance for analytical tools (e.g., Spark). - In the Data Warehouse:

Data is cleaned, transformed, and structured. Apply stricter models like Dimensional Modeling or Data Vault. Fact and Dimension tables enable efficient analytical queries. Primary/foreign keys, indexes, and constraints enforce data integrity.

This example shows how modeling depends on the data’s purpose and processing stage. A skilled data engineer selects the right approach for each part of the architecture.

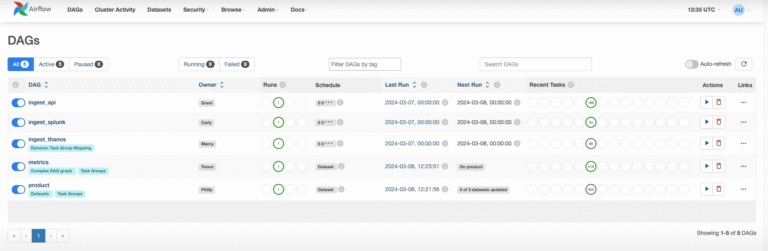

Tools and Processes

Various tools assist with data modeling, from ERD diagramming tools to comprehensive metadata platforms. I often use Draw.io for ERDs (let me know if you know better tools!). But more important than tools is having a repeatable process and fostering collaboration to create the right initial design.

Challenges and Limitations

Data modeling isn’t without challenges—it can be time-consuming and complex. Overly rigid models may lack future flexibility. Yet, the long-term benefits far outweigh these hurdles.

Final Thoughts

For data engineers, data modeling isn’t just a step in database design—it’s a philosophy for understanding, organizing, and preparing data for effective use. Mastering it empowers you to work with data confidently and efficiently, helping your organization extract maximum value.

As data continues to grow, your expertise in data modeling will be invaluable. I hope this article has been helpful and inspires you to dive deeper into this critical skill. If you have questions or experiences to share, I’d love to hear them!